AI SEO (Search Engine Optimization), also referred to as “GEO (Generative Engine Optimization)” or “AEO (Answer Engine Optimization),” is a set of on-page (on your website, through content) and off-page (on other websites) techniques that help you earn top placement in the answers produced by LLMs (Large Language Models). Alternatively, AI SEO could mean “AI-enabled” SEO. I will touch briefly on this latter topic, but the main purpose of this article is to help marketing professionals and do-it-yourselfers leverage the increasing percentage of search captured by LLMs.

Before we get into it, this AI SEO guide was handwritten by a 15-year SEO professional, Nate Wheeler. Nate owns the professional agency weCreate, in addition to a number of other businesses, and hosts a business podcast.

You can find more about him here: Nate’s LinkedIn.

AI SEO can mean two things, so to make sure you’re reading the right article: this guide focuses primarily on ranking in AI search results. AI-enabled SEO, on the other hand, refers to using AI tools to write your content, do your keyword research, and post your content. If you’re interested in that second topic, here are some of my thoughts to start:

I don’t trust AI tools to reliably produce the results I need - yet. In fact, I don’t think it’s even close.

Certainly, many companies out there are using unedited AI articles to rank, but for anyone who really cares about the accuracy of their content and connection to their audience, it’s just not an option. Even keyword research is best done by hand (if you know what you’re doing). For example, the best an AI tool is going to do for you is to provide an estimated keyword difficulty, which is rarely accurate. To accurately estimate organic keyword difficulty, search the keyword, analyze the on-page and off-page SEO of the top-ranking search results, and then see how far your authority and content are from those competitors. If it’s a matter of content optimization or creation, then you write the article; if it’s a matter of authority, then you pick a different keyword. Now obviously, you can build backlinks to gain authority, but that takes time. I probably shouldn’t even publish the process I just described, because many agencies don’t understand it, and the SEO research tools that offer keyword difficulty metrics understand it even less.
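If you want to make that decision repeatable, here is a minimal Python sketch of the comparison. It assumes you have already collected the numbers by hand; the `PageProfile` fields, the 0-100 scales, and the `authority_tolerance` threshold are hypothetical illustrations, not metrics from any particular tool.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class PageProfile:
    """Hand-collected numbers for one page (all fields are illustrative)."""
    authority: float      # e.g. a 0-100 authority score from your tool of choice
    content_score: float  # your own 0-100 judgment of topic coverage


def assess_keyword(mine: PageProfile, top_results: list[PageProfile],
                   authority_tolerance: float = 10.0) -> str:
    """Compare your page against the median of the top-ranking results."""
    rival_authority = median(p.authority for p in top_results)
    rival_content = median(p.content_score for p in top_results)

    # An authority gap takes time (backlinks) to close, so check it first.
    if mine.authority + authority_tolerance < rival_authority:
        return "pick a different keyword"
    # Authority is close enough, so the remaining gap is content.
    if mine.content_score < rival_content:
        return "write or improve the article"
    return "already competitive"


if __name__ == "__main__":
    top = [PageProfile(62, 80), PageProfile(55, 70), PageProfile(48, 75)]
    print(assess_keyword(PageProfile(50, 60), top))
```

The authority check comes first on purpose: content is something you can fix this week, while authority is not.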

This step-by-step AI SEO guide describes the process of ranking in AI search engines. The most popular of the new LLMs is ChatGPT (I specify “new” because technically Gemini is Google, which has greater usage), but there is a host of others, including Gemini (Google), Bing Copilot, Grok, Claude, DeepSeek, and many more. My current favorite is Perplexity, because you can switch between models and modes.

Since it would take infinite time for me to write about each one of these, here’s a chart that gives a summary of each and an oversimplification of what you need to do to rank in results.

| Model | Advantages | Disadvantages | Ranking Techniques |
|---|---|---|---|
| GPT-4o (OpenAI) | High reasoning, large context, conversational, robust citation handling | Cost, limited open access, prefers authoritative sources | Entities, authority signals, reputable citations, formatted answers |
| Gemini 2.5 Pro (Google) | Multimodal, seamless search integration, native schema support | Less transparent outputs, prioritizes Google-indexed content | Schema markup, Google-authority, topic coverage |
| Claude 4 Opus (Anthropic) | Strong summarization, low hallucination, ethical filtering | Less realtime data, some citation omission | Clarity, neutrality, explainability, trusted citations |
| Grok 3 (xAI) | Fast responses, open integration, current data feeds | Occasional factual drift, less rigorous citation use | API-style format, entity tagging, timely content |
| Llama 4 Maverick (Meta) | Open-source, flexible deployment, community support | May lack enterprise-grade citation rigor, less moderation | Clear entities, internal links, open data references |
| DeepSeek R1 | Strong code/data support, technical niche strength | Limited general domain reasoning, mostly developer use | Technical documentation, code blocks, precise schemas |
| Mistral Medium 3 | High-speed, low latency, European data handling | Some language limitations, not as robust in citations | Topic silos, multi-language entities, concise formatting |
| Qwen 3 (Alibaba) | Strong Asian language support, multimodal input | Less coverage of western content, variable transparency | Multi-language markup, region-specific entities, visual schema |
| OpenAI o3 | Developer focus, real-time docs, API optimization | Single-language bias, less general use | API docs, clear heading structure, technical FAQ |

Remember, Google is one of the original gangsters of the LLM group. They’ve created some of the most advanced algorithms and AI processes to produce search results. The difference is really in the formatting. Your new language models are going to produce conversational and interactive search results to a greater extent than traditional Google.

If you look at the chart above, you’ll find some of the ranking factors to be the same as you’ll find in traditional SEO. Why is this? The answer simplifies AI SEO to a great extent:

“LLMs use traditional search engines as a data set. Just like a human user, they would prefer not to have to crawl through 100 pages to get to an answer.”

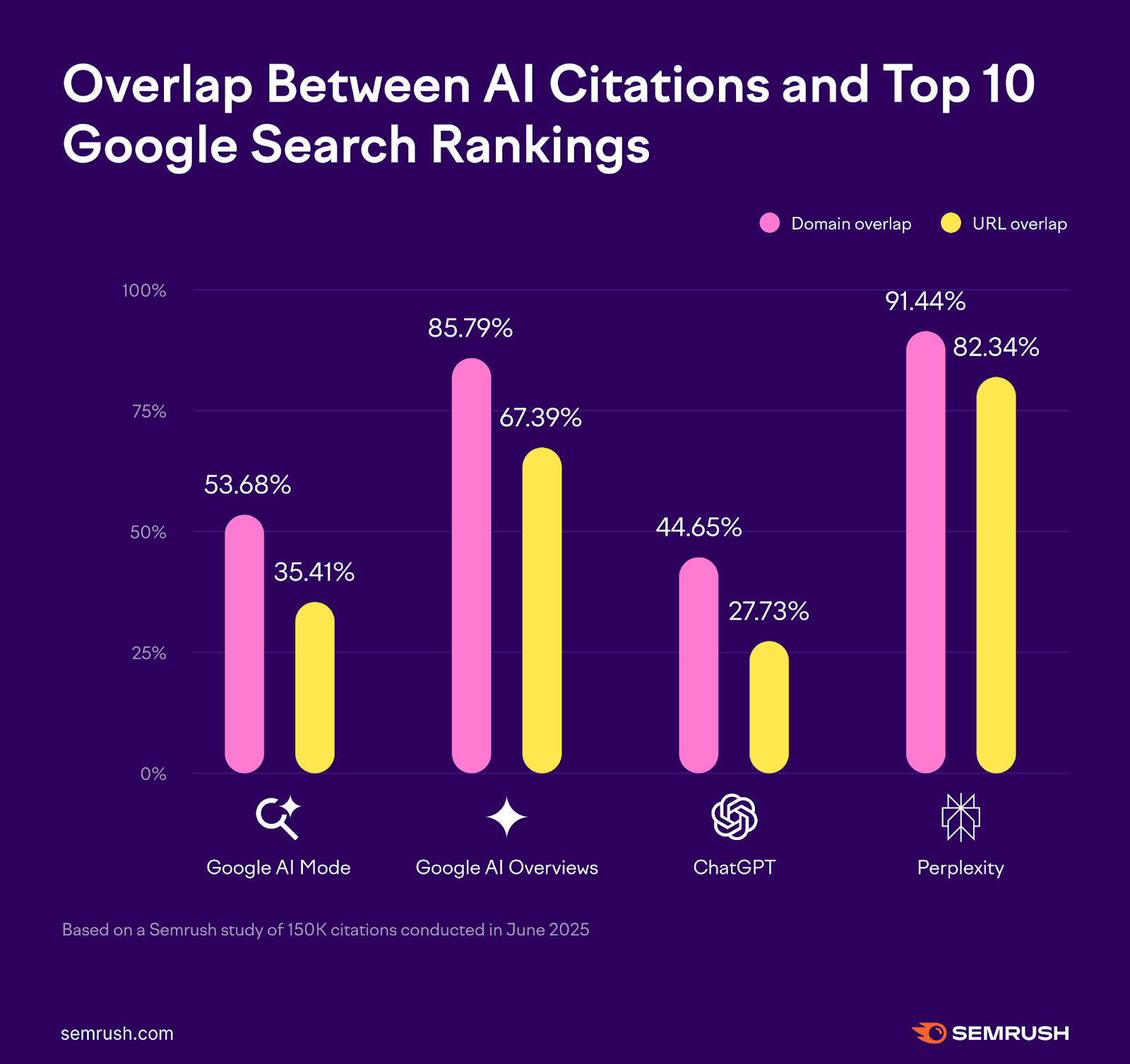

In other words, to rank in GPT, you need to perform many of the same actions you would while optimizing for Google. In fact, you can see a strong correlation between the top search results in Google and in major LLMs, with the chart below showing the overlap between domains and URLs in both. For example, the websites and pages Perplexity cites in its answers are almost always among the top 10 Google search results for the same query.
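You can measure this overlap for your own queries. The sketch below is one simple way to do it in Python, assuming you have copied the Google top 10 URLs and the URLs an LLM cited into two lists by hand; the function names and the choice to ignore a leading `www.` and trailing slashes are my own assumptions.

```python
from urllib.parse import urlparse


def domain(url: str) -> str:
    """Return the host of a URL, lowercased and without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def citation_overlap(google_top10: list[str], llm_citations: list[str]) -> dict[str, float]:
    """Share of LLM-cited URLs and domains that also appear in Google's top results."""
    if not llm_citations:
        return {"url_overlap": 0.0, "domain_overlap": 0.0}

    # Trailing slashes are stripped so /page and /page/ count as the same URL.
    google_urls = {u.rstrip("/") for u in google_top10}
    cited_urls = {u.rstrip("/") for u in llm_citations}
    google_domains = {domain(u) for u in google_top10}
    cited_domains = {domain(u) for u in llm_citations}

    return {
        "url_overlap": len(cited_urls & google_urls) / len(cited_urls),
        "domain_overlap": len(cited_domains & google_domains) / len(cited_domains),
    }
```

A high domain overlap with a low URL overlap suggests the model trusts the same sites as Google but prefers different pages on them.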

Some of the models (ChatGPT in particular) diverge significantly from the top 10 Google search results. We are about to dig into why this is, and how you can perform LLM-specific AI SEO to ensure you’re appearing in the models you care most about. First, though, we need to decide which models you SHOULD care about.

The image below demonstrates the percentage of search captured by each model. Keep in mind that your target audience may have a higher or lower chance of using any of them.

The table demonstrates that in most cases, we are going to care primarily about Google’s Gemini, GPT/Copilot, and Claude in that order. Perplexity deserves honorable mention because you can switch between models inside their platform. Starting from the top, here are the main ranking factors for each and their comparison to traditional SEO:

| Model (Platform) | Search Volume Share (%) |
|---|---|
| Google Gemini (SGE, Gemini) | ~81.6% (traditional + AI) |
| ChatGPT (GPT-4o, GPT-4.5) | ~9.0% |
| Perplexity (various LLMs) | ~0.02% |
| Claude (ClaudeAI) | ~2.2–2.5% |
| Bing Copilot (GPT-4o + others) | ~4.1% |
| Grok (xAI) | <0.1% |
| Llama 4 Maverick (Meta) | <0.05% |
| DeepSeek R1 | <0.05% |
| Mistral Medium 3 | <0.02% |
| Qwen 3 (Alibaba) | <0.02% |
| BLOOM / GPT-NeoX-20B (Open-source) | <0.01% |

Google Gemini will most likely pull from top search results, meaning that if your website doesn’t have Authority (mostly having to do with backlinks, explained more below), then even with perfect topic coverage, you won’t end up in the AI overview. The simple answer to “how to rank in Gemini AI overview” is: rank in Google. The way to do this is to have more and better backlinks than your competitors, and to have more and better content than your competitors (sounds easy, right?). For the additional item, “schema markup,” I’d refer you to this article for the sake of brevity.

Key tips for ranking:

I see far more differences in ranking factors with GPT (compared to traditional SEO) than I do with Gemini; however, GPT is also crawling Google search results, so there’s more similarity than difference.

Entities are defined relationships and differences between objects. You can think of entities as “nouns” - people, places, and things. For an example of an entity, study how I started this article. I defined the term “AI SEO”, I defined other ways to refer to it, and I differentiated it from a similar topic that you may have been looking for. You might also think of this as simply clarity in writing (See Claude below).

Authority signals are citations in other websites’ content (otherwise known as backlinks). If you are featured in a publication, directory, partner’s website, or many other types of sites, you have backlinks (assuming there’s a clickable link included). These authority signals are going to be exactly the same as in traditional SEO. Use a tool like Moz to research competitors, identify their backlinks, and replicate them when possible.
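Once you have exported referring domains from your backlink tool, finding what to replicate is a set comparison. This is a rough Python sketch under that assumption; it does not call any tool's API, and the function name and input shapes are hypothetical.

```python
from collections import Counter


def backlink_gap(my_domains: set[str],
                 competitor_domains: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Rank referring domains that link to competitors but not to you.

    Returns (domain, number_of_competitors_linked) pairs, most common first.
    """
    counts: Counter[str] = Counter()
    for links in competitor_domains.values():
        # Set difference: domains this competitor has that you do not.
        counts.update(links - my_domains)
    # Sort by count descending, then alphabetically for a stable order.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))
```

Domains that link to several competitors at once are usually the best place to start, since they have already shown they link to businesses like yours.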

Reputable citations are essentially the same as backlinks. I would think a little more along the lines of a reputable association related to your business, or a place that lists “ ‘whatever you do’ companies”. This item differs significantly from traditional search optimization because many of these lists offer no-follow backlinks, which would normally do little to nothing for your ranking.

Answer: You’re reading one right now! A formatted answer in an article or page anticipates the exact question someone will ask and provides them with the answer. This type of “Question & Answer” formatting is perfect for LLMs to crawl because most inquiries in those models are formatted as a long-tail question, which the LLM is charged with answering.

The main method of implementing formatted answers in an article is by starting with a broad topic, listing the top questions the reader will likely have, and then thoroughly answering each of those questions.

Take the following steps:

(Obviously or not, you don’t have to use the words “answer,” “supporting context,” or “implementation” when constructing your own.)
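To keep every Q&A on a page consistent, you can treat each formatted answer as a small record. The Python sketch below assumes the three parts named above (answer, supporting context, implementation) and also emits schema.org `FAQPage` JSON-LD for the same content. The class and function names are hypothetical, and whether any given LLM reads this markup is an assumption on my part.

```python
import json
from dataclasses import dataclass


@dataclass
class FormattedAnswer:
    question: str
    answer: str              # the direct answer, stated first
    supporting_context: str  # why the answer holds
    implementation: str      # what the reader should do next

    def body(self) -> str:
        """Join the three parts in order, answer first."""
        return " ".join([self.answer, self.supporting_context, self.implementation])


def faq_jsonld(items: list[FormattedAnswer]) -> str:
    """Build schema.org FAQPage markup for a list of formatted answers."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": item.question,
                "acceptedAnswer": {"@type": "Answer", "text": item.body()},
            }
            for item in items
        ],
    }
    return json.dumps(data, indent=2)
```

The output would go inside a `<script type="application/ld+json">` tag on the page that contains the visible questions and answers.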

Claude’s ranking factors (to capture a meager 2.2 percent of search) are merely a rewording of what we would consider writing best practices. The outliers here are “neutrality” and “explainability.”

Neutrality, as Claude considers it, is presenting information without a biased conclusion. Clearly, neutrality is only neutral to those who agree with the non-biased conclusion, but that’s beside the point. Essentially, they’d prefer answers that most major sources agree with, and they’d like those answers to have credible citations. I’ll give you an example of a bad answer and a good one.

Bad example: Neutrality is in the eye of the beholder. Claude will most likely reward the content and answers that regurgitate the already captured media sources and search engines, framing the “neutral” answer as the indisputable truth when it’s only the available truth.

Good example: Neutrality is the principle that content provides value to a searcher when information is presented factually without an emotional appeal.

Explainability is a feature of Claude’s programming (and that of other models), designed to reflect an increasing desire to make LLMs transparent in their reasoning. Essentially, LLM users want to know, “How did you come up with that answer?” If they understand the logic behind it, then they trust the result. Explainability is also a crucial feature for those placing safeguards to prevent AI takeover! I’d love to get into that topic, but not today. So how does explainability as a feature translate to a technique for ranking?

Explainability fundamentals:

Provide citations, Q&As, step-by-step instructions, and a clear content hierarchy (h1s, h2s, etc.).
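The content hierarchy part is easy to check automatically. Here is a minimal sketch using Python's standard `html.parser`; the two rules it applies (exactly one h1, and no skipped heading levels) are a common convention that I am assuming here, not a documented requirement of any LLM.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect heading levels (1-6) in the order they appear in the page."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag: str, attrs) -> None:
        # HTMLParser lowercases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def hierarchy_problems(html: str) -> list[str]:
    """Return human-readable problems with the heading structure."""
    parser = HeadingOutline()
    parser.feed(html)
    problems = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    # Compare each heading with the one before it to spot skipped levels.
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        if cur > prev + 1:
            problems.append(f"h{prev} is followed by h{cur} (skipped a level)")
    return problems
```

An empty list means the outline passes both checks, which is all this sketch can tell you; it says nothing about whether the headings themselves are clear.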

Example of Explainability:

One concrete example you’ll find in this article is the “What models should we focus on” topic, supported by a chart that shows the percentage of searches. A reader or LLM can easily see why I decided to go further into Gemini, GPT, and Claude.

See the above discussion under “Gemini” for trusted citations, and as for clarity, if you are leveraging explainability in your article, then you are 90% of the way there. The only other concept to understand is: Could a person who’s not familiar with the subject matter quickly grasp the concept you’re conveying, and be able to follow your argument?

With this in mind, you may use the structure of this article since I’ve done a reasonably good job of this by defining unfamiliar terms, spelling out acronyms, and using a logical progression (I hope).

The future of SEO will be wildly different from what it is today because the goal will change. Currently, the goal of SEO is to drive traffic to an individual website. In 5-10 years, the goal will not be that at all. In fact, I believe that we will consistently see less and less human traffic on our websites. The LLMs in existence benefit from keeping traffic and eyeballs on their platform. Picture this: in 2030, a person uses ChatGPT because they are seeking a specific product or service. Today, that person would probably see a result in the LLM listing the top 5 or 10 providers, then navigate away to investigate them. They might ask the LLM for more specific answers about each, but in the end, they complete the transaction or request for quote in a separate location.

In the future scenario, the language model will understand the exact criteria that meet your needs, and you’ll be able to complete any transaction or inquiry directly from the LLM interface. Here’s the kicker, though: the LLM still needs to reference a source that it believes is credible. We need to think along the lines of credibility and thoroughness. This goes back to traditional marketing - understanding buyer personas, and completely alleviating all of their concerns so they can decide on the spot. Currently, website owners can still hold onto the hope that if a website visitor doesn’t see what they’re looking for, they will reach out and ask a question. That option simply won’t be available in a future world because it takes too much time.

Tips for future success:

Implementing these tips will ensure that you are providing an LLM with all of the information it needs to promote you to a potential buyer. Also, keep your eye out for the future of contact form or purchase integration with LLMs. I’m not currently sure what this will look like, but there will have to be a method to authorize a model like ChatGPT to complete an inquiry on behalf of the human.

AI SEO is not a complete script flip from traditional SEO. As we’ve discussed, the techniques and best practices that I and other professionals have followed over the past 5 years have translated well into LLMs, and many of the organic Google rankings have turned into top citations in ChatGPT and other models. To put it into a sentence: Write excellent content backed up by research that anticipates your audience’s questions and needs, and ensure you are actively implementing a backlink building strategy. My last tip might be the most valuable! Reverse engineer the search results in LLMs. See what information they are referencing when composing an answer and copy it!

If you found this detailed AI SEO guide for 2026 helpful and are excited about putting some of it into action, then good luck to you! Please share the article and give us a follow on LinkedIn. If you need some help taking over LLMs and taking down the competition, then I look forward to hearing from you!